Mark's recent blog post on Raring community skunkworks got me thinking. I agree it would be unfair to spin this story as Canonical/Ubuntu switching to closed development. I also agree that (as the damage control messaging was quick to point out) inviting some members of the community to participate in closed development projects is actually a step towards more openness rather than a step backwards.

That said, it certainly makes the "closed development" option more official and organized, which in my opinion is not a step in the right direction. It reinforces it as a perfectly valid option, while I would really like it to remain an exception for corner cases. So at this point, it may be useful to insist a bit on the benefits of open development, and on why dropping them might not be such a good idea.

Open Development is a transparent way of developing software, where source code, bugs, patches, code reviews, design discussions and meetings happen in the open and are accessible to everyone. "Open Source" is a prerequisite of open development, but you can certainly do open source without doing open development: that's what I call the Android model, and what others call the "Open behind walls" model. You can go further than open development by also doing "Open Design": letting an open community of equals discuss and define the future features your project will implement, rather than restricting that privilege to a closed group of "core developers".

Open Development allows you to "release early, release often" and get testing, QA and feedback from (all) your users. This is actually a good thing, not a bad thing. That feedback will help you catch corner cases, consider issues that you didn't predict, and get outside patches. More importantly, Open Development helps lower the barrier of entry for contributors to your project. It blurs the line between consumers and producers of the software (no more "us vs. them" mentality), resulting in a much more engaged community. Inviting select individuals to have early access to features before they are unveiled sounds more like a proprietary-style beta testing program to me. It won't give you the amount of direct feedback and variety of contributors that open development gives you. Is the trade-off worth it?

As much as I dislike the Android model, I understand that Google's ability to give some select OEMs a bit of a head start has some value. Reading Mark's post, though, it seems that the main benefits for Ubuntu are avoiding early exposure of immature code and getting a bigger PR splash at release time. I personally think that in the short term, the drop in QA due to reduced feedback will offset those benefits, and in the long term, the resulting drop in community engagement will also make this a bad trade-off.

In OpenStack, we founded the project on the Four Opens: Open Source, Open Development, Open Design and Open Community. This early decision is what made OpenStack so successful as a community, not the "cloud" hype. Open Development made us very friendly to new developers wanting to participate, and once they experienced Open Design (as exemplified in our Design Summits), they were sold and turned into advocates of our model and our project within their employing companies. Open Development was really instrumental to OpenStack's growth and adoption.

In summary, I think Open Development is good because you end up producing better software with a larger and more engaged community of contributors, and if you want to drop that advantage, you had better have a very good reason.

Next week our community will gather in always-sunny San Diego for the OpenStack Summit. Our usual Design Summit is now part of the general event: the Grizzly Design Summit sessions will run over all 4 days of the event! We start Monday at 9am and finish Thursday at 5:40pm. The schedule is now up at:

http://openstacksummitfall2012.sched.org/overview/type/design+summit

This link will only show you the Design Summit sessions. Click here for the complete schedule. Minor scheduling changes may still happen over the next few days as people realize they are double-booked, but otherwise it's pretty final now.

For newcomers, please note that the Design Summit is different from classic conferences or the other tracks of the OpenStack Summit. There are no formal presentations or speakers. The sessions at the Design Summit are open discussions between contributors on a specific development topic for the upcoming development cycle, moderated by a session lead. It is possible to prepare a few slides to introduce the current status and kick off the discussion, but these should never be formal speaker-to-audience presentations.

I'll be running the Process topic, which covers the development process and core infrastructure discussions. It runs Wednesday afternoon and all day Thursday, and we have a pretty awesome set of stuff to discuss. Hope to see you there!

If you want to talk about something that is not covered elsewhere in the Summit, please note that we'll have an Unconference room, open from Tuesday to Thursday. You can grab a 40-min slot there to present anything related to OpenStack! In addition to that, we'll also have 5-min Lightning talks after lunch from Monday to Wednesday, where you can talk about anything you want. There will be a board posted on the Summit floor: first come, first served :)

More details about the Grizzly Design Summit can be found on the wiki. See you all soon!

It's been a long time since my last blog post... I guess that cycle was busier for me than I expected, due to my involvement in setting up the Foundation Technical Committee.

Anyway, we are now at the end of the 6-month Folsom journey for OpenStack core projects, a ride which involved more than 330 contributors, implementing 185 features and fixing more than 1400 bugs in core projects alone!

One day before release, we have OpenStack 2012.2 ("Folsom") release candidates published for all the components:

- OpenStack Compute (Nova), at RC3
- OpenStack Networking (Quantum), at RC3
- OpenStack Identity (Keystone), at RC2
- OpenStack Dashboard (Horizon), at RC2
- OpenStack Block Storage (Cinder), at RC3
- OpenStack Storage (Swift), at version 1.7.4

We are expecting OpenStack Image Service (Glance) RC3 later today!

Unless a critical, last-minute regression is found today in these proposed tarballs, they should form the official OpenStack 2012.2 release tomorrow! Please take them for a last regression test ride, and don't hesitate to ping us on IRC (#openstack-dev @ Freenode) or file bugs (tagged folsom-rc-potential) if you think you can convince us to reroll.

This Thursday we will publish our second milestone of the Folsom cycle for Nova. It will include a number of new features, including the one I worked on: a new, more configurable and extensible nova-rootwrap implementation. Here is what you should know about it, depending on whether you're a Nova user, packager or developer!

Architecture

Purpose

The goal of the root wrapper is to allow the unprivileged nova user to run a number of actions as the root user, in the safest manner possible. Historically, Nova used a specific sudoers file listing every command that the nova user was allowed to run, and just used sudo to run that command as root. However, this was difficult to maintain (the sudoers file was in packaging), and did not allow for complex filtering of parameters (advanced filters). The rootwrap was designed to solve those issues.

How rootwrap works

Instead of just calling `sudo make me a sandwich`, Nova calls `sudo nova-rootwrap /etc/nova/rootwrap.conf make me a sandwich`. A generic sudoers entry lets the nova user run nova-rootwrap as root.

nova-rootwrap looks for filter definition directories in its configuration file, and loads command filters from them. Then it checks whether the command requested by Nova matches one of those filters, in which case it executes the command (as root). If no filter matches, it denies the request.
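The match-then-execute logic described above can be sketched in a few lines of Python. This is a simplified illustration, not nova-rootwrap's actual code: `SimpleCommandFilter` and `resolve_command` are hypothetical names, and the filter only compares the command name, in the spirit of the basic CommandFilter described later in this post.

```python
import os
from typing import List, Optional, Sequence


class SimpleCommandFilter:
    """Hypothetical filter: allows a command if its name matches exec_path."""

    def __init__(self, exec_path: str, run_as: str = "root") -> None:
        self.exec_path = exec_path
        self.run_as = run_as

    def match(self, userargs: Sequence[str]) -> bool:
        # Only the command name is checked; arguments are not inspected.
        return bool(userargs) and os.path.basename(self.exec_path) == userargs[0]

    def get_command(self, userargs: Sequence[str]) -> List[str]:
        # Run the configured absolute path rather than whatever PATH resolves.
        return [self.exec_path] + list(userargs[1:])


def resolve_command(filters: Sequence[SimpleCommandFilter],
                    userargs: Sequence[str]) -> Optional[List[str]]:
    """Return the command to execute as root, or None to deny the request."""
    for candidate in filters:
        if candidate.match(userargs):
            return candidate.get_command(userargs)
    return None  # deny by default: no filter matched


filters = [SimpleCommandFilter("/sbin/kpartx"), SimpleCommandFilter("/bin/chown")]
print(resolve_command(filters, ["kpartx", "-a", "/dev/nbd0"]))
# → ['/sbin/kpartx', '-a', '/dev/nbd0']
print(resolve_command(filters, ["make", "me", "a", "sandwich"]))
# → None
```

The key design point is the default: anything that no filter explicitly allows is refused.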

Security model

The escalation path is fully controlled by the root user. A sudoers entry (owned by root) allows nova to run (as root) a specific rootwrap executable, and only with a specific configuration file (which should be owned by root). nova-rootwrap imports the Python modules it needs from a cleaned (and system-default) PYTHONPATH. The configuration file (also root-owned) points to root-owned filter definition directories, which contain root-owned filter definition files. This chain ensures that the nova user itself is not in control of the configuration or modules used by the nova-rootwrap executable.
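An administrator might want to verify that every link in this chain really is root-controlled. The helper below is a hypothetical sketch, not something nova-rootwrap ships. It treats a path as safe when it is owned by uid 0 and is not writable by group or others. The paths it checks are the ones recommended in this post, so adjust them to your layout.

```python
import os
import stat


def is_root_controlled(path: str) -> bool:
    """Hypothetical check: owned by root and not writable by group or others."""
    st = os.stat(path)
    owned_by_root = st.st_uid == 0
    writable_by_others = bool(st.st_mode & (stat.S_IWGRP | stat.S_IWOTH))
    return owned_by_root and not writable_by_others


if __name__ == "__main__":
    # Files and directories in the escalation chain described above.
    chain = [
        "/etc/nova/rootwrap.conf",
        "/etc/nova/rootwrap.d",
        "/usr/share/nova/rootwrap",
    ]
    for path in chain:
        if not os.path.exists(path):
            print(path, "missing")
        elif is_root_controlled(path):
            print(path, "OK")
        else:
            print(path, "NOT root-controlled")
```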

Rootwrap for users: Nova configuration

Nova must be configured to use nova-rootwrap as its `root_helper`. You need to set the following in `nova.conf`:

root_helper=sudo nova-rootwrap /etc/nova/rootwrap.conf

The configuration file (and executable) used here must match the ones defined in the sudoers entry (see below), otherwise the commands will be rejected!
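To see why the two must match, consider what happens to a command run with `run_as_root=True`. Conceptually, Nova prefixes the command with the `root_helper` string, so sudo receives exactly that executable and configuration path. The sketch below is a hypothetical simplification: `build_root_command` is not Nova's code. It uses `shlex.split` to turn the setting into an argument list.

```python
import shlex
from typing import List, Sequence

# Value taken from the nova.conf setting above.
ROOT_HELPER = "sudo nova-rootwrap /etc/nova/rootwrap.conf"


def build_root_command(cmd: Sequence[str], run_as_root: bool = False,
                       root_helper: str = ROOT_HELPER) -> List[str]:
    """Hypothetical: prepend the root helper when root privileges are requested."""
    if not run_as_root:
        return list(cmd)
    return shlex.split(root_helper) + list(cmd)


print(build_root_command(["kpartx", "-a", "/dev/nbd0"], run_as_root=True))
# → ['sudo', 'nova-rootwrap', '/etc/nova/rootwrap.conf', 'kpartx', '-a', '/dev/nbd0']
```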

Rootwrap for packagers

Sudoers entry

Packagers need to make sure that Nova nodes contain a sudoers entry that lets the nova user run nova-rootwrap as root, pointing to the root-owned `rootwrap.conf` configuration file and allowing any parameter after that:

nova ALL = (root) NOPASSWD: /usr/bin/nova-rootwrap /etc/nova/rootwrap.conf *
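As a rough illustration of what the trailing `*` does, the sketch below approximates the sudoers match with Python's `fnmatch`. It joins the requested command line into one string and compares it with the allowed pattern. sudo's real matching is more involved; for example, it resolves the executable path first. Treat this as a model only. `sudoers_allows` is a hypothetical helper.

```python
from fnmatch import fnmatchcase
from typing import Sequence

# Allowed command line from the sudoers entry above.
ALLOWED = "/usr/bin/nova-rootwrap /etc/nova/rootwrap.conf *"


def sudoers_allows(argv: Sequence[str]) -> bool:
    """Approximate check: does the joined command line match the allowed pattern?"""
    return fnmatchcase(" ".join(argv), ALLOWED)


# Any arguments are accepted after the expected executable and config file...
print(sudoers_allows(["/usr/bin/nova-rootwrap", "/etc/nova/rootwrap.conf", "kpartx", "-a"]))  # True
# ...but pointing rootwrap at another configuration file is refused.
print(sudoers_allows(["/usr/bin/nova-rootwrap", "/tmp/evil.conf", "kpartx", "-a"]))  # False
```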

Filters path

nova-rootwrap looks for a `filters_path` option in `rootwrap.conf`, which lists the directories it should load filter definition files from. It is recommended that Nova-provided filter files are loaded from `/usr/share/nova/rootwrap` and extra user filter files are loaded from `/etc/nova/rootwrap.d`.

[DEFAULT]

filters_path=/etc/nova/rootwrap.d,/usr/share/nova/rootwrap

The directories listed on this line should all exist, and be owned and writable only by the root user.
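The following minimal sketch reads this setting with Python's standard `configparser`, assuming the comma-separated format shown above. `read_filters_path` is a hypothetical helper for illustration, not nova-rootwrap's own code.

```python
import configparser
from typing import List


def read_filters_path(conf_text: str) -> List[str]:
    """Return the filter directories listed in a rootwrap.conf-style file."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    raw = parser["DEFAULT"]["filters_path"]
    # Comma-separated list: strip whitespace and drop empty entries.
    return [d.strip() for d in raw.split(",") if d.strip()]


EXAMPLE_CONF = """\
[DEFAULT]
filters_path=/etc/nova/rootwrap.d,/usr/share/nova/rootwrap
"""

print(read_filters_path(EXAMPLE_CONF))
# → ['/etc/nova/rootwrap.d', '/usr/share/nova/rootwrap']
```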

Filter definitions

Finally, packaging needs to install, for each node, the filter definition file that corresponds to it. You should not install any other filter file on that node, otherwise you would allow extra unneeded commands to be run by nova as root.

The filter file corresponding to the node must be installed in one of the `filters_path` directories (preferably `/usr/share/nova/rootwrap`). For example, on compute nodes, you should only have `/usr/share/nova/rootwrap/compute.filters`. The file should be owned and writable only by the root user.

All filter definition files can be found in the Nova source code under `etc/nova/rootwrap.d`.
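To make the loading step concrete, here is a hypothetical loader that reads every `*.filters` file in the configured directories. The entry format is an assumption modeled on Folsom-era Nova filter files: a `[Filters]` section with lines like `kpartx: CommandFilter, /sbin/kpartx, root`. The reference section on the wiki is authoritative. The `*.filters` suffix check and the "later entries win" rule are choices made for this sketch only.

```python
import configparser
import os
from typing import Dict, List, Tuple

FilterDef = Tuple[str, List[str]]  # (filter type, remaining fields)


def load_filter_definitions(filter_dirs: List[str]) -> Dict[str, FilterDef]:
    """Hypothetical loader: read every *.filters file found in filter_dirs."""
    definitions: Dict[str, FilterDef] = {}
    for directory in filter_dirs:
        if not os.path.isdir(directory):
            continue  # skip missing directories instead of failing
        for name in sorted(os.listdir(directory)):
            if not name.endswith(".filters"):
                continue
            parser = configparser.RawConfigParser()
            parser.read(os.path.join(directory, name))
            if not parser.has_section("Filters"):
                continue
            for key, value in parser.items("Filters"):
                # Assumed format: FilterType, /path/to/executable, run_as_user
                fields = [field.strip() for field in value.split(",")]
                definitions[key] = (fields[0], fields[1:])  # later entries win
    return definitions


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        with open(os.path.join(tmp, "compute.filters"), "w") as fh:
            fh.write("[Filters]\nkpartx: CommandFilter, /sbin/kpartx, root\n")
        print(load_filter_definitions([tmp]))
        # → {'kpartx': ('CommandFilter', ['/sbin/kpartx', 'root'])}
```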

Rootwrap for plug-in writers: adding new run-as-root commands

Plug-in writers may need to have the nova user run additional commands as root. They should use `nova.utils.execute(run_as_root=True)` to achieve that. They should create their own filter definition file and install it (owned and writable only by the root user!) into one of the `filters_path` directories (preferably `/etc/nova/rootwrap.d`). For example, the foobar plugin could define its extra filters in an `/etc/nova/rootwrap.d/foobar.filters` file.

The format of the filter file is defined in the reference section of the wiki documentation.
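As an illustration of the installation step, the hypothetical foobar plugin could ship a helper like the one below. `install_filter_file` is not part of Nova. It copies the file and restricts its permissions so that only the owner can write to it. It changes ownership to root:root only when it is actually running as root, for example from a package post-install script.

```python
import os
import shutil


def install_filter_file(src: str, dest_dir: str) -> str:
    """Hypothetical installer: copy a .filters file and lock down its permissions."""
    dest = os.path.join(dest_dir, os.path.basename(src))
    shutil.copyfile(src, dest)
    os.chmod(dest, 0o644)  # writable only by the owner, readable by everyone
    if os.geteuid() == 0:
        os.chown(dest, 0, 0)  # root:root ownership requires running as root
    return dest


# Typical use (as root): install_filter_file("foobar.filters", "/etc/nova/rootwrap.d")
```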

Rootwrap for core developers

Adding new run-as-root commands

Core developers may need to have the nova user run additional commands as root. They should use `nova.utils.execute(run_as_root=True)` to achieve that, and add a filter for the command they need in the corresponding `.filters` file under `etc/nova/rootwrap.d/` in Nova's source code. For example, to add a command that needs to be run by network nodes, they should modify the `etc/nova/rootwrap.d/network.filters` file.

The format of the filter file is defined in the reference section of the wiki documentation.

Adding your own filter types

The default filter type, CommandFilter, is pretty basic: it only checks that the command name matches, and does not perform advanced checks on the command arguments. A number of other, more command-specific filter types are available (see the reference section on the wiki).

That said, you can easily define new filter types to further control exactly which commands you allow the nova user to run as root. See `nova/rootwrap/filters.py` for details.
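Here is a sketch of what a new filter type could look like. The base class is a self-contained, simplified stand-in written for this example. The real base class and its interface live in `nova/rootwrap/filters.py` and may differ. `ExactArgsFilter` is a hypothetical filter that only allows one exact argument list.

```python
import os
from typing import List, Sequence


class CommandFilter:
    """Simplified stand-in for the basic filter: checks the command name only."""

    def __init__(self, exec_path: str, run_as: str, *args: str) -> None:
        self.exec_path = exec_path
        self.run_as = run_as
        self.args = args

    def match(self, userargs: Sequence[str]) -> bool:
        return bool(userargs) and os.path.basename(self.exec_path) == userargs[0]

    def get_command(self, userargs: Sequence[str]) -> List[str]:
        return [self.exec_path] + list(userargs[1:])


class ExactArgsFilter(CommandFilter):
    """Hypothetical stricter filter: arguments must equal the configured ones."""

    def match(self, userargs: Sequence[str]) -> bool:
        return super().match(userargs) and tuple(userargs[1:]) == self.args


ip_show = ExactArgsFilter("/sbin/ip", "root", "link", "show")
print(ip_show.match(["ip", "link", "show"]))              # True
print(ip_show.match(["ip", "link", "delete", "eth0"]))    # False
```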

This documentation, together with a reference section detailing the file formats, is available on the wiki.

How did the OpenStack Bug Triage day we organized yesterday go? Did organizing an event make a difference? Here are the results!

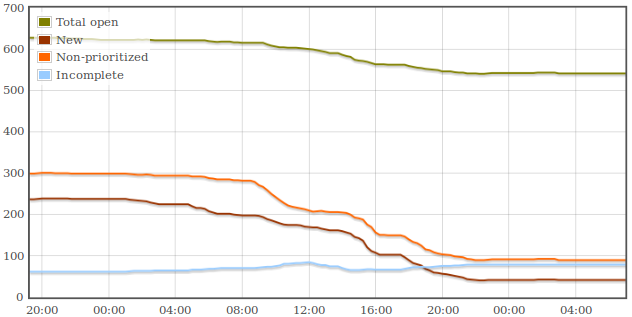

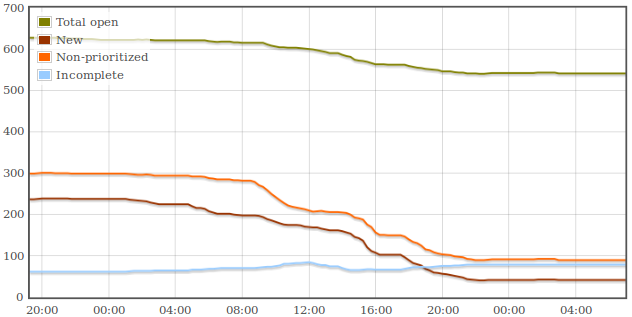

Nova has more bugs than all the other core projects combined, and the

most slack to clean up. We went from 237 "New" bugs at the beginning of

the day and down to 42, a completion rate of 82%. In the mean time we

managed to close permanently 86 open bugs over a total of 627:

So the BugTriage day definitely made a difference! Congrats to all the participants! It leaves our bug tracker in much better shape, and created momentum around bug triaging and keeping an up-to-date database of known issues.
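As a quick sanity check, the percentages follow directly from the Nova counts. (237 - 42) / 237 ≈ 82% of the "New" bugs were triaged. The 86 bugs closed out of 627 open bugs amount to roughly 13.7%. The same arithmetic in Python:

```python
# Nova figures from the Bug Triage day.
new_start, new_end = 237, 42     # "New" bugs at the start and end of the day
closed, total_open = 86, 627     # bugs permanently closed, out of all open bugs

triage_completion = (new_start - new_end) / new_start  # 195 / 237
closed_share = closed / total_open

print(f"{triage_completion:.0%}")  # 82%
print(f"{closed_share:.1%}")       # 13.7%
```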

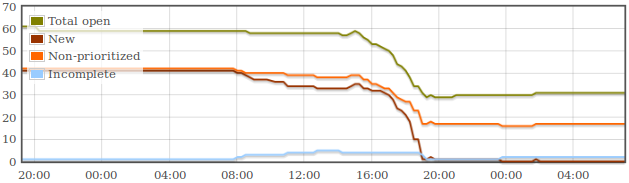

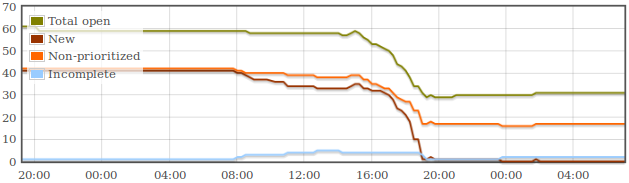

The success is even more obvious on smaller projects, with Glance,

Keystone and Quantum all managing to complete all

BugTriage tasks in the day ! See

for example the results for Quantum:

See you all for our next BugDay... which will most likely be a Bug Squashing Day (close as many bugs as possible) shortly after folsom-2.